Home · Book Reports · 2018 · Building Machine Learning Systems With Python

- Author :: Willi Richert and Luis Pedro Coelho

- Publication Year :: 2013

- Read Date :: 2018-03-23

- Source :: Building_Machine_Learning_Systems_with_Python.pdf

designates my notes. / designates important.

Thoughts

This book is by no means “the definitive guide” to machine learning. It is more a kind of starter kit, and even that might be pushing it. It assumes you already have some foundational skills in the mathematics, though it does give some light insight.

The code/examples started off great, but they get wonky fast. Sometimes the names of variables seem to change midway through an example, where ’target' becomes ’labels’ and such. Other parts of the code don’t work period. It could be because the book is a little old (2013), in computer years.

the array comparison features[:,2] < #2 works, but not when used as

if feature[:,2] < 2:

print "yes"

else:

print "no"

The datasets are not included and I had some difficulty finding some of them. For example the 20newsgroups data is easy to find, but does not work with the code in the book. Actually, I think the book has a companion site, but you need to log in/verify purchase to access the data.

The further I get the less complete code there is. Playing with the example code is how I get a better understanding of what is actually going on, without it I am at a disadvantage. It will also show plot after plot with no code showing of how they were generated. It does give a description, but given that I am a beginner, I was often unable to understand exactly what they were doing.

I am disappointed in the book overall. I don’t want to put it down, it simply didn’t fulfill what I wanted it to fulfill. I want a nice hand-holding experience so that I can see exactly what the code is along with line by line explanations of what is happening. This is not that book. I don’t think what I want will be found in a 2-300 page book; there is too much information.

On the bright side, it did give me more exposure to the concepts and helped reinforce my grammar on the subject, likely making further forays into machine learning more palatable.

The last chapter switches gears and the book discusses a python library, jug, that can be used for cluster computing. Amazon Web Services are also covered as a quick and easy way to get a computational cluster up and running quickly.

It does have a detailed table of contents, something many other books lack these days.

Code

This is probably not very worthwhile, but here is the little bit of code I tinkered with in relation to this book:

- boston_houses.py

- clustering.py

- iris_classification.py

- knn.py

- recursive_feature_elimination.py

- web_traffic.py

Links

In case you don’t have a conversion tool nearby, you might want to check out sox: http://sox.sourceforge.net. It claims to be the Swiss Army Knife of sound processing, and we agree with this bold claim.

http://metaoptimize.com/qa – This Q&A site is laser-focused on machine learning topics.

Table of Contents

- 01: Getting Started with Python Machine Learning

- 02: Learning How to Classify with Real-world Examples

- 03: Clustering – Finding Related Posts

- 04: Topic Modeling

- 05: Classification – Detecting Poor Answers

- 06: Classification II – Sentiment Analysis

- 07: Regression – Recommendations

- 08: Regression – Recommendations Improved

- 09: Classification III – Music Genre Classification

- 10: Computer Vision – Pattern Recognition

- 11: Dimensionality Reduction

- 12: Big(ger) Data

- Actual page numbers.

· 01: Getting Started with Python Machine Learning

page 9:

-

You will even find that a simple algorithm with refined data generally outperforms a very sophisticated algorithm with raw data. This part of the machine learning workflow is called feature engineering…

-

http://metaoptimize.com/qa – This Q&A site is laser-focused on machine learning topics.

page 15:

- index an array with an array:

a = np.array([0,1,77,3,4,5])

>>> a[np.array([2,3,4])]

array([77, 3, 4])

- use np.array’s to trim outliers:

a = np.array([0,1,77,3,4,5])

>>> a[a>4] = 4

>>> a

array([0, 1, 4, 3, 4, 4])

- As this is a frequent use case, there is a special clip function for it, clipping the values at both ends of an interval with one function call as follows:

>>> a.clip(0,4)

array([0, 1, 4, 3, 4, 4])

- dealing with NaN:

# let's pretend we have read this from a text file

c = np.array([1, 2, np.NAN, 3, 4])

>>> c

array([ 1., 2., nan, 3., 4.])

>>> np.isnan(c)

array([False, False, True, False, False], dtype=bool)

>>> c[~np.isnan(c)]

array([ 1., 2., 3., 4.])

>>> np.mean(c[~np.isnan(c)])

2.5

page 16:

- in every algorithm we are about to implement, we should always look at how we can move loops over individual elements from Python to some of the highly optimized NumPy or SciPy extension functions.

page 17:

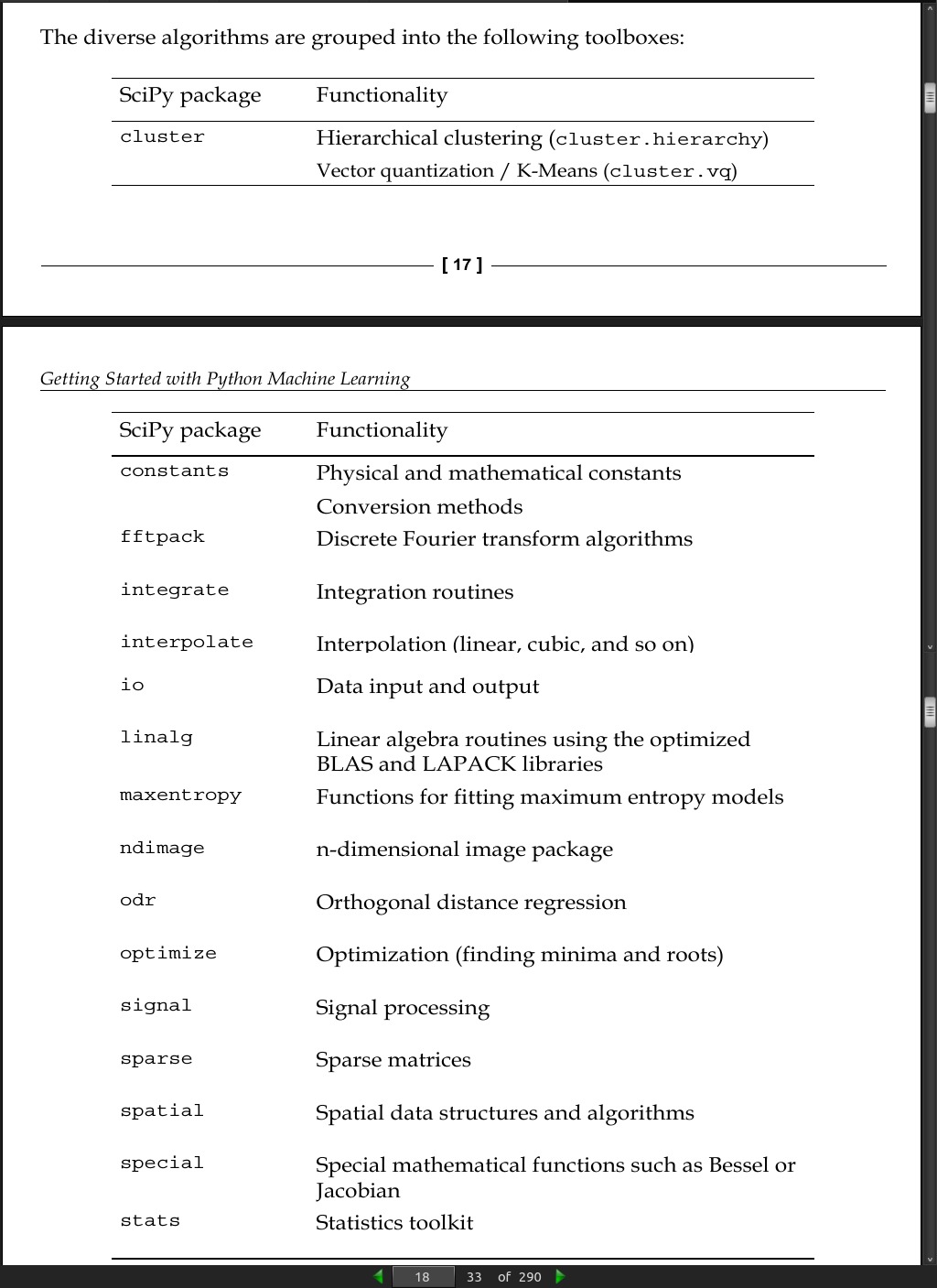

- the complete namespace of NumPy is also accessible via SciPy.

page 18:

page 22:

- This error will be calculated as the squared distance of the model’s prediction to the real data. That is, for a learned model function, f, the error is calculated as follows:

def error(f, x, y):

return sp.sum((f(x)-y)**2)

page 24:

- Although our first model clearly is not the one we would use, it serves a very important purpose in the workflow: we will use it as our baseline until we find a better one.

· 02: Learning How to Classify with Real-world Examples

page 34:

- The following four attributes of each plant were measured:

Sepal length

Sepal width

Petal length

Petal width

-

In general, we will call any measurement from our data as features.

-

This is the supervised learning or classification problem; given labeled examples, we can design a rule that will eventually be applied to other examples.

page 42:

- The University of California at Irvine (UCI) maintains an online repository of machine learning datasets (at the time of writing, they are listing 233 datasets). Both the Iris and Seeds dataset used in this chapter were taken from there. The repository is available online: http://archive.ics.uci.edu/ml/

page 43:

- One interesting aspect of these features is that the compactness feature is not actually a new measurement, but a function of the previous two features, area and perimeter. It is often very useful to derive new combined features. This is a general area normally termed feature engineering; it is sometimes seen as less glamorous than algorithms, but it may matter more for performance (a simple algorithm on well-chosen features will perform better than a fancy algorithm on not-so-good features).

· 03: Clustering – Finding Related Posts

page 59:

- SnowballStemmer(“english”) from nltk.stem does not work… there is no English language.

page 60:

-

we want a high value for a given term in a given value if that term occurs often in that particular post and very rarely anywhere else.

-

This is exactly what term frequency – inverse document frequency (TF-IDF) does; TF stands for the counting part, while IDF factors in the discounting. A naive implementation would look like the following:

import scipy as sp

def tfidf(term, doc, docset):

tf = float(doc.count(term))/sum(doc.count(w) for w in docset)

idf = math.log(float(len(docset))/(len([doc for doc in docset if term in doc])))

return tf * idf

page 61:

- For the following document set, docset, consisting of three documents that are already tokenized, we can see how the terms are treated differently, although all appear equally often per document:

>>> a, abb, abc = ["a"], ["a", "b", "b"], ["a", "b", "c"]

>>> D = [a, abb, abc]

>>> print(tfidf("a", a, D))

0.0

>>> print(tfidf("b", abb, D))

0.270310072072

>>> print(tfidf("a", abc, D))

0.0

>>> print(tfidf("b", abc, D))

0.135155036036

>>> print(tfidf("c", abc, D))

0.366204096223

-

We see that a carries no meaning for any document since it is contained everywhere. b is more important for the document abb than for abc as it occurs there twice.

-

In reality, there are more corner cases to handle than the above example does. Thanks to Scikit, we don’t have to think of them, as they are already nicely packaged in TfidfVectorizer, which is inherited from CountVectorizer. Sure enough, we don’t want to miss our stemmer:

from sklearn.feature_extraction.text import TfidfVectorizer

class StemmedTfidfVectorizer(TfidfVectorizer):

def build_analyzer(self):

analyzer = super(TfidfVectorizer, self).build_analyzer()

return lambda doc: (

english_stemmer.stem(w) for w in analyzer(doc))

vectorizer = StemmedTfidfVectorizer(min_df=1,

stop_words='english',

charset_error='ignore')

- Could not get 20newsgroup data to load…

· 04: Topic Modeling

page 75:

- LDA: latent Dirichlet allocation, which is a topic modeling method; and linear discriminant analysis, which is a classification method. They are completely unrelated, except for the fact that the initials LDA can refer to either.

page 78:

-

Sparsity means that while you may have large matrices and vectors, in principle, most of the values are zero (or so small that we can round them to zero as a good approximation). Therefore, only a few things are relevant at any given time.

-

Often problems that seem too big to solve are actually feasible because the data is sparse. For example, even though one webpage can link to any other webpage, the graph of links is actually very sparse as each webpage will link to a very tiny fraction of all other webpages.

· 05: Classification – Detecting Poor Answers

page 110:

- What we want is to have a high success rate when we are predicting a post as either good or bad, but not necessarily both. That is, we want as many true positives as possible. This is what precision captures:

Precision = True Positive/(True Positive + False Positive)

- If instead our goal would have been to detect as much good or bad answers as possible, we would be more interested in recall:

Recall = True Positive/(True Positive + False Negative)

page 112:

- better description of a classifier’s performance: the area under curve (AUC). This can be understood as the average precision of the classifier and is a great way of comparing different classifiers.

· 06: Classification II – Sentiment Analysis

- Naive Bayes and tweets.

· 07: Regression – Recommendations

page 154:

- These penalized models often go by rather interesting names. The L1 penalized model is often called the Lasso, while an L2 penalized model is known as Ridge regression. Of course, we can combine the two and we obtain an Elastic net model.

· 08: Regression – Recommendations Improved

· 09: Classification III – Music Genre Classification

page 182:

- In case you don’t have a conversion tool nearby, you might want to check out sox: http://sox.sourceforge.net. It claims to be the Swiss Army Knife of sound processing, and we agree with this bold claim.

page 190:

- There is a sister of precision-recall curves, called receiver operator characteristic (ROC) that measures similar aspects of the classifier’s performance, but provides another view on the classification performance. The key difference is that P/R curves are more suitable for tasks where the positive class is much more interesting than the negative one, or where the number of positive examples is much less than the number of negative ones. Information retrieval or fraud detection are typical application areas. On the other hand, ROC curves provide a better picture on how well the classifier behaves in general.

page 191:

- When comparing two different classifiers on the same dataset, we are always safe to assume that a higher AUC of a P/R curve for one classifier also means a higher AUC of the corresponding ROC curve and vice versa. Therefore, we never bother to generate both.

page 197:

-

…we used features that we understood only so much as to know how and where to put them into our classifier setup. The one failed, the other succeeded. The difference between them is that in the second case, we relied on features that were created by experts in the field.

-

And that is totally OK. If we are mainly interested in the result, we sometimes simply have to take shortcuts—we only have to make sure to take these shortcuts from experts in the specific domains.

· 10: Computer Vision – Pattern Recognition

page 220:

- We focused on mahotas, which is one of the major computer vision libraries in Python. There are others that are equally well maintained. Skimage (scikit-image) is similar in spirit, but has a different set of features. OpenCV is a very good C++ library with a Python interface. All of these can work with NumPy arrays and can mix and match functions from different libraries to build complex pipelines.

· 11: Dimensionality Reduction

page 221:

-

Superfluous features can irritate or mislead the learner. This is not the case with all machine learning methods (for example, Support Vector Machines love high-dimensional spaces). But most of the models feel safer with less dimensions.

-

Another argument against high-dimensional feature spaces is that more features mean more parameters to tune and a higher risk of overfitting.

-

The data we retrieved to solve our task might just have artificial high dimensions, whereas the real dimension might be small. - Less dimensions mean faster training and more variations to try out,

resulting in better end results.

- If we want to visualize the data, we are restricted to two or three dimensions. This is known as visualization.

page 222:

- principal component analysis (PCA), linear discriminant analysis (LDA), and multidimensional scaling (MDS).

page 223:

- Given two equal-sized data series, it returns a tuple of the correlation coefficient values and the p-value, which is the probability that these data series are being generated by an uncorrelated system. In other words, the higher the p-value, the less we should trust the correlation coefficient:

from import scipy.stats import pearsonr

pearsonr([1,2,3], [1,2,3.1])

#(0.99962228516121843, 0.017498096813278487) #(p,r)

pearsonr([1,2,3], [1,20,6])

#(0.25383654128340477, 0.83661493668227405)

-

In the first case, we have a clear indication that both series are correlated. In the second one, we still clearly have a non-zero value.

-

This only works on linear relationships.

page 225:

- For non-linear relationships, mutual information comes to the rescue.

page 226:

- For convenience, we can also use scipy.stats.entropy([0.5, 0.5], base=2). We set the base parameter to 2 to get the same result as the previous one. Otherwise, the function will use the natural logarithm via np.log(). In general, the base does not matter as long as you use it consistently.

page 227:

page 231:

-

A real workhorse in this field is RFE, which stands for recursive feature elimination. It takes an estimator and the desired number of features to keep as parameters and then trains the estimator with various feature sets as long as it has found a subset of the features that are small enough. The RFE instance itself pretends to be like an estimator, thereby, wrapping the provided estimator.

-

In the following example, we create an artificial classification problem of 100 samples using the convenient make_classification() function of datasets. It lets us specify the creation of 10 features, out of which only three are really valuable to solve the classification problem:

from sklearn.feature_selection import RFE

from sklearn.linear_model import LogisticRegression

from sklearn.datasets import make_classification

X,y = make_classification(n_samples=100, n_features=10, n_informative=3, random_state=0)

clf = LogisticRegression()

clf.fit(X, y)

selector = RFE(clf, n_features_to_select=3)

selector = selector.fit(X, y)

print(selector.support_)

# [False True False True False False False False True False]

print(selector.ranking_)

# [4 1 3 1 8 5 7 6 1 2]

page 233:

- Principal component analysis is often the first thing to try out if you want to cut down the number of features and do not know what feature extraction method to use. PCA is limited as it is a linear method…

page 236:

- Linear Discriminant Analysis (LDA) comes to the rescue here. It is a method that tries to maximize the distance of points belonging to different classes while minimizing the distance of points of the same class.

page 237:

- Multidimensional scaling (MDS) tries to position the individual data points in the lower dimensional space such that the new distance there resembles as much as possible the distances in the original space. As MDS is often used for visualization, the choice of the lower dimension is most of the time two or three.

· 12: Big(ger) Data

page 243:

- jug is a python package that allows you to memorize or take advantage of multiple cores/clusters.

{% endfilter %} {% endblock %}